Artificial intelligence (AI) is growing rapidly, but its massive energy demands and computational costs are becoming unsustainable. Now, a surprising solution is emerging from the world of quantum physics: tensor networks. Originally developed to handle complex interactions between particles, these mathematical structures are proving remarkably effective at compressing AI models, reducing energy consumption, and even making AI more accessible.

The Bottleneck: Bloated AI Models

Large language models (LLMs), such as those behind ChatGPT, are notoriously resource-intensive. Their size and complexity require vast amounts of energy to train and run, driving some tech companies to consider extreme solutions like dedicated small nuclear power plants to keep data centers online. This isn’t just an environmental concern; it also limits where AI can be deployed.

The core problem is dimensionality. As AI models tackle more complex tasks, the number of variables explodes, and storing and processing all of them quickly becomes impractical. Physicists ran into the same wall decades ago and developed tensor networks to get around it.

Tensor Networks: A Physics-Based Compression Solution

Tensor networks break down colossal datasets into smaller, more manageable components. Imagine a giant sausage that’s too big to cook at once; twisting it into perfectly portioned hot dogs makes it grill-ready. Similarly, tensor networks dissect massive tensors (multi-dimensional arrays of numbers) into linked, smaller tensors.
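The “twisting” step can be illustrated with one standard recipe, the tensor-train (or matrix product state) decomposition. It splits a big array into a chain of small three-index “cores” using repeated singular value decompositions (SVDs). The following is a minimal NumPy sketch that assumes a toy tensor known to be highly compressible. Real data rarely compresses this cleanly, and `max_rank` is a hypothetical knob that trades accuracy for size.

```python
import numpy as np

def tt_svd(tensor, max_rank):
    """Split a d-index array into a chain of 3-index cores via repeated truncated SVDs."""
    dims = tensor.shape
    cores = []
    rank = 1
    # Treat everything after the first index as one big column index.
    mat = tensor.reshape(rank * dims[0], -1)
    for k in range(len(dims) - 1):
        u, s, vt = np.linalg.svd(mat, full_matrices=False)
        new_rank = min(max_rank, len(s))
        # Keep only the largest singular values: this is where compression happens.
        u, s, vt = u[:, :new_rank], s[:new_rank], vt[:new_rank, :]
        cores.append(u.reshape(rank, dims[k], new_rank))
        # Carry the remainder forward and expose the next index as rows.
        mat = (np.diag(s) @ vt).reshape(new_rank * dims[k + 1], -1)
        rank = new_rank
    cores.append(mat.reshape(rank, dims[-1], 1))
    return cores

def tt_to_full(cores):
    """Contract the chain of cores back into one big array."""
    result = cores[0]
    for core in cores[1:]:
        # Sum over the shared bond index linking neighbouring cores.
        result = np.tensordot(result, core, axes=([-1], [0]))
    # Drop the dummy size-1 bonds at both ends of the chain.
    return result.reshape(result.shape[1:-1])

# Toy example: a 6-index tensor with 4 values per index (4**6 = 4096 numbers).
idx = np.indices((4,) * 6).sum(axis=0)  # i0 + i1 + ... + i5 at every position
T = np.sin(0.1 * idx)
cores = tt_svd(T, max_rank=2)
print(sum(c.size for c in cores), T.size)  # 80 4096
print(np.allclose(tt_to_full(cores), T))   # True
```

This particular tensor has exact chain rank 2, because sin(a + b) = sin a cos b + cos a sin b. Six small cores holding 80 numbers therefore reproduce all 4,096 entries.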

The key advantage? They can shrink a model dramatically while largely preserving its accuracy. Multiverse Computing, a startup co-founded by physicist Román Orús, has already demonstrated this with the Llama 2 7B model: its CompactifAI technique shrinks the model from 27 GB to just 2 GB, a reduction of more than 90%, with only a small drop in accuracy.
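The article does not detail how CompactifAI works, so the sketch below does not reproduce it. First it checks the quoted size reduction. Then it shows the smallest possible tensor network applied to a model: a truncated SVD that replaces one dense weight matrix with two thin factors. The layer size, rank, and synthetic weights are hypothetical, chosen so the matrix is nearly low-rank. Real LLM weights are not guaranteed to compress this well.

```python
import numpy as np

# Check the headline figure: 27 GB down to 2 GB.
reduction = 1 - 2 / 27
print(f"{reduction:.1%}")  # 92.6%

def factorize_layer(weight, rank):
    """Replace one dense weight matrix with two thin factors via truncated SVD."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    left = u[:, :rank] * s[:rank]  # shape (rows, rank)
    right = vt[:rank, :]           # shape (rank, cols)
    return left, right

# Hypothetical 512x512 layer whose weights are nearly low-rank (rank 32 plus small noise).
rng = np.random.default_rng(0)
W = rng.normal(size=(512, 32)) @ rng.normal(size=(32, 512)) + 0.01 * rng.normal(size=(512, 512))

left, right = factorize_layer(W, rank=32)
kept = (left.size + right.size) / W.size
rel_err = np.linalg.norm(W - left @ right) / np.linalg.norm(W)
print(f"parameters kept: {kept:.1%}, relative error: {rel_err:.4f}")
```

At inference time the layer computes `x @ left @ right` instead of `x @ W`. That stores 12.5% of the original parameters for this toy layer.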

Beyond Compression: A New AI Architecture

The long-term vision is even bolder: building AI models from the ground up using tensor networks, bypassing traditional neural networks entirely. Neural networks, while powerful, are energy-hungry and opaque. Tensor networks offer the potential for faster training and more transparent inner workings.

Miles Stoudenmire of the Flatiron Institute believes this approach could unlock “latent power” in AI, allowing it to run efficiently on personal devices without relying on cloud connections. Imagine AI-powered refrigerators or washing machines operating offline.
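One published design for such a model, from Stoudenmire and David Schwab, works differently from a neural network. It maps each input feature (for example, a pixel intensity) to a two-component vector and contracts the result with a matrix product state, a chain of small tensors that plays the role of the weights. The minimal NumPy sketch below is loosely modeled on that idea. The weights are random and untrained, the sizes are hypothetical, and training is not shown.

```python
import numpy as np

def feature_map(x):
    """Map each input value in [0, 1] to a 2-component vector, one per feature."""
    return np.stack([np.cos(np.pi * x / 2), np.sin(np.pi * x / 2)], axis=-1)

def random_mps(n_features, bond_dim, n_classes, rng):
    """Untrained model: one 3-index core per feature; the last core carries the class index."""
    cores = []
    for i in range(n_features):
        left = 1 if i == 0 else bond_dim
        right = n_classes if i == n_features - 1 else bond_dim
        cores.append(rng.normal(scale=0.5, size=(left, 2, right)))
    return cores

def mps_scores(cores, x):
    """Contract the input with the chain site by site; cost grows linearly with features."""
    phi = feature_map(x)  # shape (n_features, 2)
    v = np.ones(1)        # dummy left bond of size 1
    for core, p in zip(cores, phi):
        # Sum over the incoming bond and this feature's 2-dim index.
        v = np.einsum("a,apb,p->b", v, core, p)
    return v              # one score per class

rng = np.random.default_rng(1)
model = random_mps(n_features=8, bond_dim=4, n_classes=3, rng=rng)
x = rng.random(8)  # hypothetical input, e.g. 8 pixel intensities
scores = mps_scores(model, x)
print(scores.shape)  # (3,)
```

The chain stands in for a full weight tensor with 2^8 × 3 = 768 entries while storing 224 numbers. Because the input is absorbed one feature at a time, evaluation never builds that full tensor.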

How It Works: The Curse of Dimensionality and Its Cure

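The “curse of dimensionality” describes how the number of values needed to describe a system grows exponentially with the number of variables, until storing them becomes impossible. A spreadsheet is a two-dimensional matrix; tensors generalize this to any number of dimensions. Consider tracking the pizza preferences of 100,000 people across 100 toppings and 100 sauces. The resulting three-dimensional tensor (people × toppings × sauces) holds 100,000 × 100 × 100, or one billion, numbers, which is still manageable. Add more variables, such as crust and cheese, and each one multiplies the total, so the size balloons exponentially.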
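The arithmetic is easy to check. This short Python sketch counts the entries as categories are added. The article gives neither of the following figures, so both are assumptions: crust and cheese also have 100 options each, and every preference is stored as a 4-byte float.

```python
PEOPLE = 100_000
OPTIONS_PER_CATEGORY = 100  # toppings and sauces per the text; crust and cheese assumed
BYTES_PER_ENTRY = 4         # assumption: one 32-bit float per preference score

def tensor_entries(n_categories):
    """Entries in a people x category_1 x ... x category_n tensor."""
    return PEOPLE * OPTIONS_PER_CATEGORY ** n_categories

for n, label in [(2, "toppings, sauces"), (3, "+ crust"), (4, "+ cheese")]:
    entries = tensor_entries(n)
    terabytes = entries * BYTES_PER_ENTRY / 1e12
    print(f"{label:<16} {entries:>18,} entries {terabytes:>10.3f} TB")
```

Each extra 100-option category multiplies storage by 100. With both assumed extras, the tensor grows from about 4 GB to about 40 TB.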

Tensor networks tame this by representing a giant tensor as a network of smaller ones, and correlations in the data are what make that possible. For instance, people who like white mushrooms tend to like cremini as well, so storing both preferences separately is largely redundant. By discarding such redundancy, tensor networks compress the data, or the model, with little or no loss of performance.
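A two-index example shows why correlations enable compression. The Python sketch below invents a preference matrix for 1,000 people and six toppings. Two hypothetical hidden tastes drive it: one for mushrooms and one for cured meats. Because the white-mushroom and cremini columns move together, an SVD finds only two significant components, and two thin factors reproduce the matrix closely. The data, topping list, and taste model are assumptions for illustration. Tensor networks apply the same idea across many indices at once.

```python
import numpy as np

rng = np.random.default_rng(42)
toppings = ["white mushroom", "cremini", "portobello", "pepperoni", "sausage", "ham"]
n_people = 1_000

# Hypothetical hidden tastes: how much each person likes mushrooms vs. cured meats.
tastes = rng.random((n_people, 2))
# How strongly each topping draws on each taste (mushrooms on taste 0, meats on taste 1).
loadings = np.array([[1.0, 0.0], [0.9, 0.1], [0.8, 0.0],
                     [0.0, 1.0], [0.1, 0.9], [0.0, 0.8]])
prefs = tastes @ loadings.T + 0.01 * rng.normal(size=(n_people, len(toppings)))

# White mushroom vs. cremini: strongly correlated.
print(np.corrcoef(prefs[:, 0], prefs[:, 1])[0, 1])

u, s, vt = np.linalg.svd(prefs, full_matrices=False)
print(np.round(s, 2))  # two large singular values, the rest near the noise level

# Keep two components: 1000x2 + 2 + 2x6 numbers instead of 1000x6.
rank = 2
approx = (u[:, :rank] * s[:rank]) @ vt[:rank]
rel_err = np.linalg.norm(prefs - approx) / np.linalg.norm(prefs)
stored = u[:, :rank].size + rank + vt[:rank].size
print(prefs.size, stored, f"{rel_err:.3f}")
```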

Real-World Results and Future Prospects

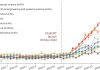

The benefits are already showing up in practice. Sopra Steria found that Multiverse’s compressed version of Llama 3.1 8B used 30–40% less energy. Researchers at Imperial College London have shown that tensor network compression can even improve accuracy compared with full-size models, because large datasets often contain irrelevant information that the technique filters out.

The ultimate goal is to move beyond compression and build entirely new AI architectures on tensor networks. Such models could train far faster (in one demonstration, a tensor network model trained in 4 seconds, compared with 6 minutes for a comparable neural network) and be easier to understand.

Tensor networks aren’t just a compression trick; they represent a fundamental shift in how we build and deploy AI. If successful, this could unlock a future where powerful AI is energy-efficient, accessible, and transparent.